Tips for Semantic Data Modeling: Insights from 9 Data Experts

We are seeing a renewed emphasis on data modeling after a brief hiatus between 2010 and 2020, when many companies opted for data lakes for speed of execution. Despite decades of work in data modeling, it's still one of the most difficult and nuanced aspects of working with data, where the default answer on best practices is "it depends."

While there is unanimous agreement on some strategies, we have also seen stark differences of opinion on some controversial topics. We are therefore excited to launch the first Data Quality Camp (DQC) newsletter post with an awesome panel of data experts and leaders from within DQC, who have generously shared their experience and knowledge to benefit the data community at large.

Some of the topics we cover in this post are the following:

Wide Tables vs. Kimball's Star Schema

Opinions on Inmon vs. Kimball

Utilizing GUI abstractions to serve data

Enabling the Data Mesh Architecture

Avoiding over-engineered data models

And much more…

Hold on to your seats and get ready to journey through the perspectives of some amazing minds in data. Learn first-hand what they think about age-old practices and the latest developments in data modeling.

Ergest Xheblati - Data Architect & Author of Minimum Viable SQL Patterns

Josh Richman - Senior Manager of Business Analytics at FLASH

Juan Sequeda - Principal Scientist at data.world and co-host of Catalog & Cocktails

Juha Korpela - Chief Product Officer at Ellie Technologies

Michael Greaves - Engineering Manager at Klarna

Mike Lombardi - Senior Data Architect at Farfetch

Sarah Floris - Senior Data & ML Engineer at Zwift

Shane Gibson - Chief Product Officer & Co-Founder at Agile Data

Timo Dechau - Founder & Data Designer at Deepskydata

Please note that most excerpts below are summarized versions of the original quotes, curated for readability and compactness.

Wide Tables vs. Kimball's Star Schema

Neutral Perspectives

This is not a straightforward choice. It largely depends on the use case the model is trying to solve.

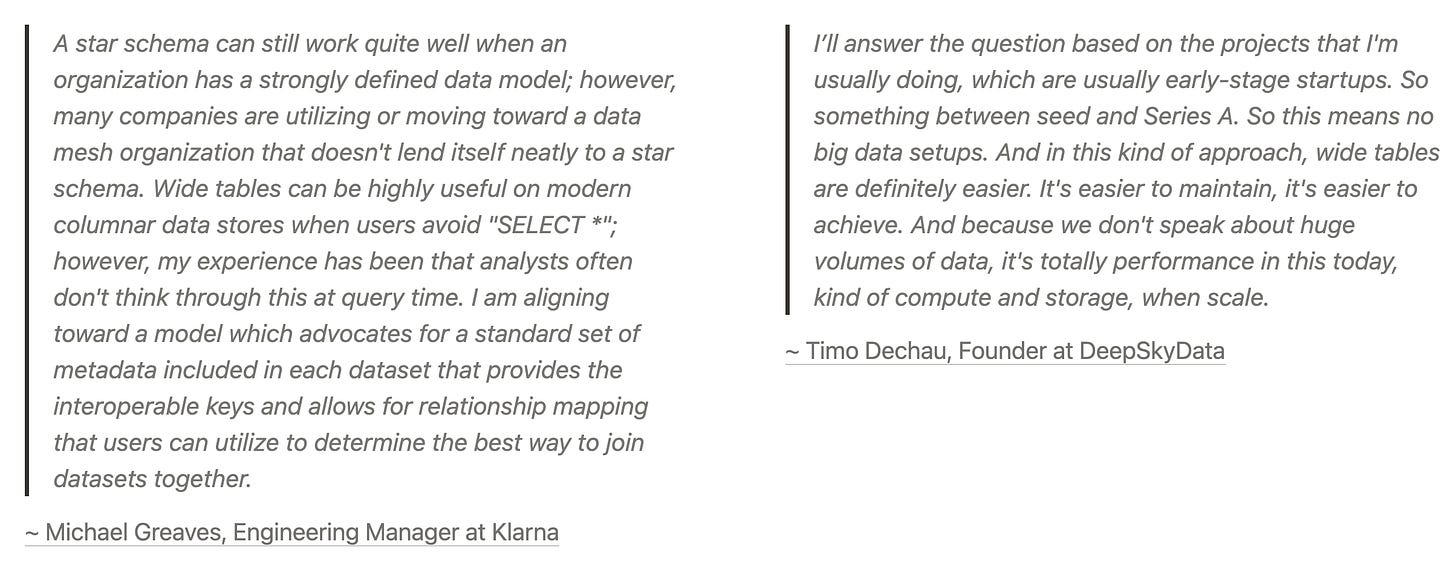
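To make the trade-off concrete, here is a minimal sketch of the two shapes being compared. It is an illustration under assumptions, not something the panelists provided: the table names (`dim_customer`, `fact_orders`, `wide_orders`), the columns, and the sample rows are all hypothetical, and SQLite is used only because it ships with Python.

```python
import sqlite3


def build_demo() -> sqlite3.Connection:
    """Create a tiny star schema and a wide table derived from it (hypothetical data)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- Star schema: one dimension table and one fact table.
        CREATE TABLE dim_customer (
            customer_id   INTEGER PRIMARY KEY,
            customer_name TEXT,
            region        TEXT
        );
        CREATE TABLE fact_orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES dim_customer(customer_id),
            amount      REAL
        );
        INSERT INTO dim_customer VALUES (1, 'Acme', 'EU'), (2, 'Globex', 'US');
        INSERT INTO fact_orders VALUES (10, 1, 100.0), (11, 1, 50.0), (12, 2, 75.0);

        -- Wide table: the same facts with dimension attributes pre-joined
        -- (customer attributes are repeated on every order row).
        CREATE TABLE wide_orders AS
        SELECT o.order_id, o.amount, c.customer_name, c.region
        FROM fact_orders o
        JOIN dim_customer c USING (customer_id);
        """
    )
    return conn


conn = build_demo()

# Star schema: the consumer joins fact and dimension at query time.
star_result = conn.execute(
    """
    SELECT c.region, SUM(o.amount)
    FROM fact_orders o
    JOIN dim_customer c USING (customer_id)
    GROUP BY c.region
    ORDER BY c.region
    """
).fetchall()

# Wide table: the consumer queries a single table with no joins.
wide_result = conn.execute(
    "SELECT region, SUM(amount) FROM wide_orders GROUP BY region ORDER BY region"
).fetchall()

print(star_result)
print(wide_result)
```

Both queries return the same revenue per region. The difference lies in where the join happens and how often dimension attributes are duplicated, which is why the choice tends to depend on the use case.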

For Wide Tables

However, with Data Mesh designs around the corner, the Star Schema seems likely to have limited use. Moreover, it doesn't seem to be a good fit for small-scale projects.

Against Wide Tables

But the Wide Table format also has its own set of drawbacks.

In the grand Inmon vs. Kimball battle, which side are you on and why?

As Ergest would put it, “there really isn't a battle here. Inmon says Kimball-style dimensional models are essentially data marts in his approach.”

Neutral Perspectives

For Kimball

Business users love GUIs. What are your suggestions on building GUI abstractions that enable them to embed business logic into data models while preventing them from impacting physical data?

Sees high potential in abstractions

Mindset Change

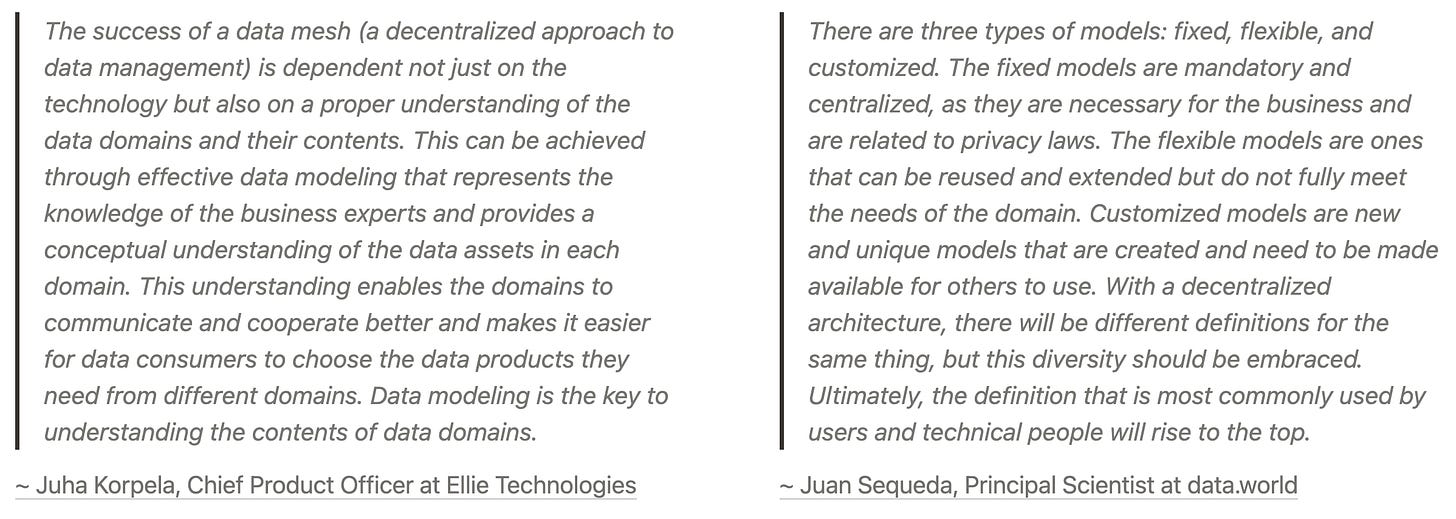

Suggestions on enabling the data mesh architecture through data modeling practices to facilitate domain ownership

Amalgam of people, processes, and technology

Identify clear demarcations

Theoretically sound, but a challenge implementation-wise

Recommendations on Data Vault 2.0

Enterprises are suffocating under complex, rich, yet unused data. How could such organizations take a simple approach to developing primary data models that facilitate loosely coupled domain interactions?

Re-usable core and use-case-driven limbs

Domain Segregation

Recommendations on reducing data modeling waste generated as a result of over-engineered data models

Prioritization of business logic

Instill a culture and practice of data cataloging

What are your thoughts and tips on including business calculations as part of data models?

Deeply embedded metrics

Against inclusion of metrics

For inclusion of metrics

Segregation is key

How do you automate model validation and data verification? Do you see data contracts playing a role here in validating quality and governance policies for the data that the models surface?

Seeks standardization of contracts

For Contracts

Workarounds

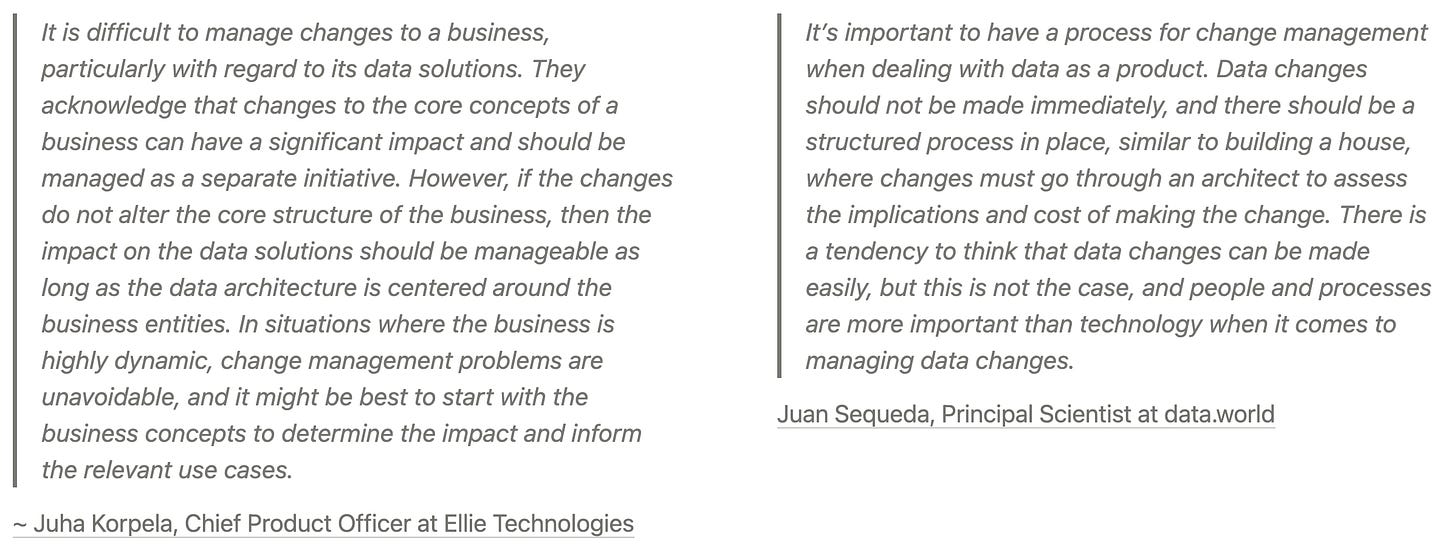

How do you optimize change management in data models, especially for cases where business logic is dynamic? What are your suggestions on the process of communicating such changes downstream?

Recommended Processes

Impact-based call

In spite of taxonomy standards and guidelines, naming conventions are often violated, creating data discrepancy and cross-functional confusion. What are your tips on enforcing naming patterns and semantics?

Templatize

Instill Guidelines

Culture Transformation

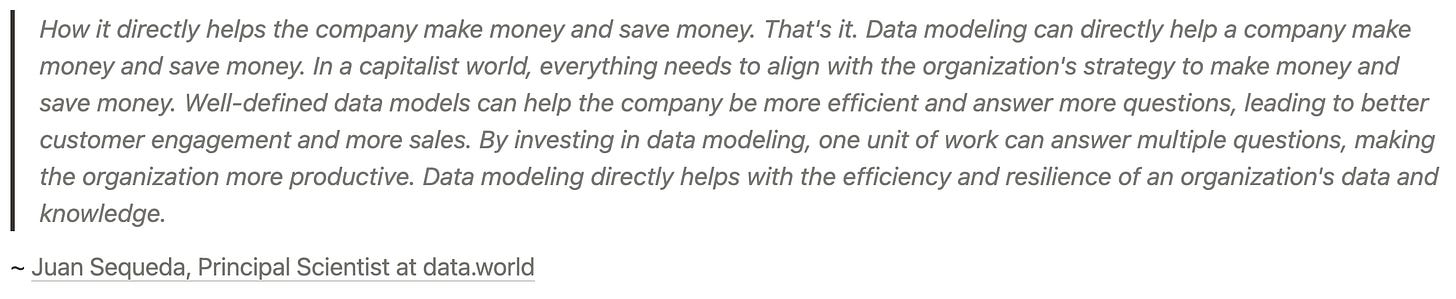

What metrics would you suggest to understand the success of data models over time?

Usage-based metrics

Efficiency Metrics

ROI Metrics

We’ll wrap up this panel piece with the hope that it was helpful and illuminating. Watch this space for the next one, and feel free to drop your suggestions on topics you’d like to hear more about!

Interested in being featured in the next DQ Community Panel release? Drop a message on LinkedIn or reach out by email.

A huge thank you to our panel of experts!

Authors Connect

Samadrita is an Advocate for the Data Operating System, a unified infrastructure pattern that enables flexible implementation of disparate data design architectures such as meshes and fabrics. She works closely with data leaders in the community to channel valuable insights and build impactful relationships.

Chad is the Chief Operator of the Data Quality Camp, the fastest-growing data quality community on the internet. He is a leading voice in the data industry and is actively involved in evangelizing and influencing data contracts, the pivotal architectural pattern that declaratively enables data quality and reliability.
